Agent architectures

LangGraph - Agent architectures

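Many LLM applications implement a fixed control flow of steps before and/or after LLM calls.

Instead of hard-coding that control flow, we sometimes want LLM systems that pick their own control flow to solve more complex problems. This is one definition of an agent: a system that uses an LLM to decide the control flow of an application. There are many ways an LLM can control an application:

- An LLM can route between two potential paths
- An LLM can decide which of many tools to call
- An LLM can decide whether the generated answer is sufficient or more work is needed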

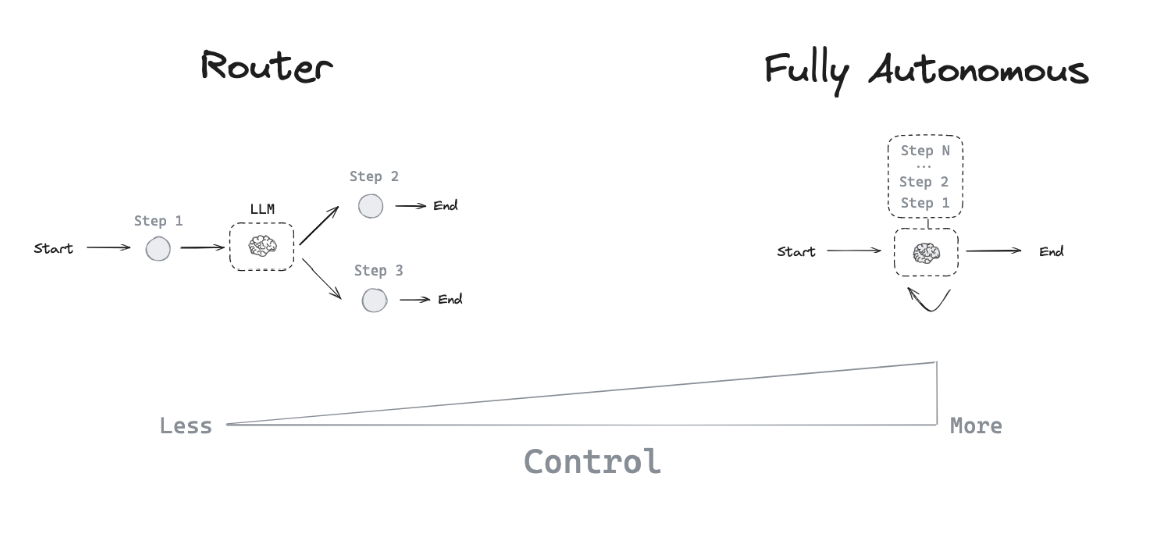

As a result, there are many different types of agent architectures, which give an LLM varying levels of control.

Router

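A router allows an LLM to select a single step from a specified set of options. This gives the LLM a relatively limited level of control: it usually makes a single decision and produces one output from a limited set of predefined options.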
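A minimal, framework-free sketch of the router idea in plain Python. It does not use LangGraph's API. The hypothetical `fake_llm_route` function stands in for a real model call. The LLM's only job is to pick one label from a fixed set, and ordinary code validates that label before dispatching to the matching step.

```python
from typing import Callable

# Hypothetical stand-in for an LLM call: a real router would prompt a model
# to pick exactly one of the allowed options.
def fake_llm_route(question: str, options: list[str]) -> str:
    return "math" if any(ch.isdigit() for ch in question) else "chitchat"

# The fixed set of steps the router may choose between.
ROUTES: dict[str, Callable[[str], str]] = {
    "math": lambda q: f"Sending {q!r} to the calculator step",
    "chitchat": lambda q: f"Sending {q!r} to the small-talk step",
}

def route(question: str) -> str:
    choice = fake_llm_route(question, list(ROUTES))
    # The LLM makes a single decision; the code refuses anything outside the set.
    if choice not in ROUTES:
        raise ValueError(f"LLM chose unknown route: {choice!r}")
    return ROUTES[choice](question)

print(route("What is 2 + 2?"))
print(route("How are you?"))
```

Rejecting unknown labels is why routers are paired with structured output. The system can only act on the decision if it is one of the expected options.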

Structured Output

Structured outputs work by giving the LLM a specific format or schema to follow in its response. Common ways to achieve them include:

- Prompt engineering: Instructing the LLM to respond in a specific format via the system prompt.
- Output parsers: Using post-processing to extract structured data from LLM responses.
- Tool calling: Leveraging built-in tool calling capabilities of some LLMs to generate structured outputs.

Structured outputs are crucial for routing because they ensure the LLM's decision can be reliably interpreted and acted upon by the system. Learn more about structured outputs in this how-to guide.
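A sketch of the output-parser approach. It assumes a model was prompted to reply with a JSON object containing `destination` and `reason` fields; these names are hypothetical. The parser pulls the JSON out of the surrounding text and validates it against a small schema. The routing decision is then either trustworthy or rejected with an error.

```python
import json
import re
from dataclasses import dataclass

ALLOWED_DESTINATIONS = {"search", "calculator", "respond"}

@dataclass
class RouteDecision:
    destination: str
    reason: str

def parse_route(raw: str) -> RouteDecision:
    """Output-parser style: extract JSON from free-form LLM text and validate it."""
    # Take the span from the first "{" to the last "}" in the reply.
    match = re.search(r"\{.*\}", raw, re.DOTALL)
    if match is None:
        raise ValueError("no JSON object found in LLM output")
    data = json.loads(match.group(0))
    decision = RouteDecision(destination=data["destination"], reason=data["reason"])
    # Validate against the schema so downstream code can trust the value.
    if decision.destination not in ALLOWED_DESTINATIONS:
        raise ValueError(f"invalid destination: {decision.destination!r}")
    return decision

# Hypothetical raw reply from a model that was prompted to answer in JSON.
raw_reply = 'Sure! {"destination": "calculator", "reason": "the user asked for arithmetic"}'
print(parse_route(raw_reply))
```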

Tool calling agent

While a router allows an LLM to make a single decision, more complex agent architectures expand the LLM's control in two key ways:

- Multi-step decision making: The LLM can make a series of decisions, one after another, instead of just one.
- Tool access: The LLM can choose from and use a variety of tools to accomplish tasks.

ReAct is a popular general-purpose agent architecture that combines these expansions, integrating three core concepts:

- Tool calling: Allowing the LLM to select and use various tools as needed.
- Memory: Enabling the agent to retain and use information from previous steps.
- Planning: Empowering the LLM to create and follow multi-step plans to achieve goals.

This architecture allows for more complex and flexible agent behaviors, going beyond simple routing to enable dynamic problem-solving with multiple steps. You can use it with create_react_agent.

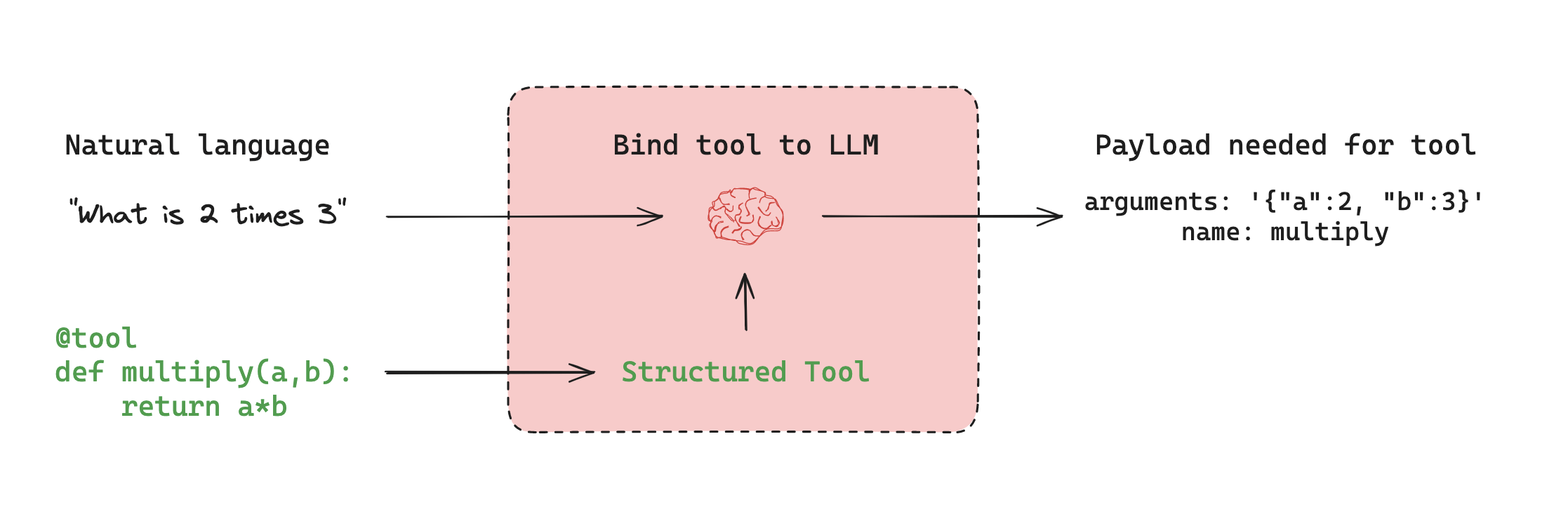

Tool calling

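Tools are useful whenever you want an agent to interact with external systems. External systems such as APIs often require a particular input schema rather than natural language. Binding a tool gives the model awareness of that schema, so it can return a tool call whose arguments match it.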

Many LLM providers support tool calling, and the tool calling interface in LangChain is simple: you can pass any Python function into ChatModel.bind_tools(function).
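The example below shows roughly what the model receives when a Python function is bound as a tool. It uses a simplified, hypothetical `function_to_schema` helper that turns a function's signature and docstring into a JSON-Schema-like description. LangChain's real conversion handles many more types and options. This sketch only shows the core idea: the function's parameters become the tool's input schema.

```python
import inspect

# Map Python annotations to JSON-Schema type names (a deliberately small subset).
_JSON_TYPES = {int: "integer", float: "number", str: "string", bool: "boolean"}

def function_to_schema(fn) -> dict:
    """Build a JSON-Schema-like tool description from a plain Python function.

    Simplified illustration only; this is not LangChain's conversion code.
    Unknown annotations fall back to "string".
    """
    sig = inspect.signature(fn)
    properties, required = {}, []
    for name, param in sig.parameters.items():
        properties[name] = {"type": _JSON_TYPES.get(param.annotation, "string")}
        # Parameters without defaults must be supplied by the model.
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "parameters": {"type": "object", "properties": properties, "required": required},
    }

def multiply(a: int, b: int) -> int:
    """Multiply two integers."""
    return a * b

print(function_to_schema(multiply))
```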

Memory

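Memory is crucial for agents, enabling them to retain and use information across multiple steps of problem-solving. It operates on different scales:

- Short-term memory: Allows the agent to access information acquired during earlier steps in a sequence.
- Long-term memory: Enables the agent to recall information from previous interactions, such as past messages in a conversation.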

LangGraph provides full control over memory implementation:

- State: User-defined schema specifying the exact structure of memory to retain.
- Checkpointers: Mechanism to store state at every step across different interactions.

This flexible approach allows you to tailor the memory system to your specific agent architecture needs. For a practical guide on adding memory to your graph, see this tutorial.
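Here is a toy illustration of state plus a checkpointer, written in plain Python rather than with LangGraph's own checkpointer classes. It has a `TypedDict` state schema and a hypothetical `InMemoryCheckpointer`. The checkpointer saves a snapshot of the state after every step, keyed by a conversation thread id. A later interaction on the same thread then resumes where the previous one stopped.

```python
from typing import TypedDict

# User-defined state schema: exactly what the agent remembers.
class AgentState(TypedDict):
    messages: list[str]
    step: int

class InMemoryCheckpointer:
    """Toy checkpointer: keeps every saved state snapshot per conversation thread."""

    def __init__(self) -> None:
        self._history: dict[str, list[AgentState]] = {}

    def save(self, thread_id: str, state: AgentState) -> None:
        # Store a copy so later mutations don't rewrite history.
        snapshot: AgentState = {"messages": list(state["messages"]), "step": state["step"]}
        self._history.setdefault(thread_id, []).append(snapshot)

    def latest(self, thread_id: str) -> AgentState:
        snapshots = self._history.get(thread_id)
        if not snapshots:
            return {"messages": [], "step": 0}  # new thread: empty state
        last = snapshots[-1]
        return {"messages": list(last["messages"]), "step": last["step"]}

def run_turn(cp: InMemoryCheckpointer, thread_id: str, user_msg: str) -> AgentState:
    state = cp.latest(thread_id)  # resume from the last checkpoint for this thread
    for text in (f"user: {user_msg}", f"assistant: echo {user_msg}"):
        state["messages"].append(text)
        state["step"] += 1
        cp.save(thread_id, state)  # checkpoint after every step
    return state

cp = InMemoryCheckpointer()
run_turn(cp, "thread-1", "hi")
print(run_turn(cp, "thread-1", "remember me?")["messages"])
```

The second call sees the first turn's messages because both share `"thread-1"`. A different thread id would start from an empty state.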

Planning

In the ReAct architecture, an LLM is called repeatedly in a while-loop. At each step the agent decides which tools to call, and what the inputs to those tools should be. Those tools are then executed, and the outputs are fed back into the LLM as observations. The while-loop terminates when the agent decides it has enough information to solve the user request and no longer needs to call any tools.
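The loop can be sketched in a few lines of plain Python. The scripted `fake_llm` function is a hypothetical stand-in for a tool-calling model. It first asks for `add`, then asks for `multiply` on the observed result, and then answers. The `max_steps` bound is an added safeguard that is not part of the description above. It stops a model that never stops calling tools from looping forever.

```python
import json

# Toy tools the agent may call.
TOOLS = {
    "add": lambda a, b: a + b,
    "multiply": lambda a, b: a * b,
}

def fake_llm(messages: list[dict]) -> dict:
    """Hypothetical scripted model: decides the next action from the transcript."""
    observations = [m for m in messages if m["role"] == "tool"]
    if len(observations) == 0:
        return {"tool_calls": [{"name": "add", "args": {"a": 2, "b": 3}}]}
    if len(observations) == 1:
        total = json.loads(observations[0]["content"])
        return {"tool_calls": [{"name": "multiply", "args": {"a": total, "b": 4}}]}
    return {"content": f"The answer is {observations[-1]['content']}."}

def react_loop(question: str, max_steps: int = 10) -> str:
    messages = [{"role": "user", "content": question}]
    for _ in range(max_steps):  # the "while-loop", bounded for safety
        reply = fake_llm(messages)
        calls = reply.get("tool_calls")
        if not calls:  # no more tools requested: the agent is done
            return reply["content"]
        messages.append({"role": "assistant", "tool_calls": calls})
        for call in calls:
            result = TOOLS[call["name"]](**call["args"])
            # Feed the tool output back to the model as an observation.
            messages.append({"role": "tool", "name": call["name"], "content": json.dumps(result)})
    raise RuntimeError("agent did not finish within max_steps")

print(react_loop("What is (2 + 3) * 4?"))
```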

ReAct implementation

There are several differences between the ReAct paper and the pre-built create_react_agent implementation:

- First, we use tool calling to have LLMs call tools, whereas the paper used prompting plus parsing of raw output. This is because tool calling did not exist when the paper was written, but it is generally better and more reliable.
- Second, we use messages to prompt the LLM, whereas the paper used string formatting. This is because at the time of writing, LLMs didn't even expose a message-based interface, whereas now that's the only interface they expose.
- Third, the paper required all inputs to the tools to be a single string. This was largely because LLMs were not very capable at the time and could only really generate a single input. Our implementation allows for using tools that require multiple inputs.
- Fourth, the paper only looks at calling a single tool at a time, largely due to limitations in LLM performance at the time. Our implementation allows for calling multiple tools at a time.
- Finally, the paper asked the LLM to explicitly generate a “Thought” step before deciding which tools to call. This is the “Reasoning” part of “ReAct”. Our implementation does not do this by default, largely because LLMs have gotten much better and it is not as necessary. Of course, if you wish to prompt it to do so, you certainly can.
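A hypothetical side-by-side sketch of three of these differences: tool calling versus parsing raw output, multiple inputs per tool, and multiple tool calls per turn. The paper-style text format and the message dictionaries are simplified assumptions for illustration. They are neither the paper's exact prompt format nor LangChain's message classes.

```python
import re

# Paper-style (simplified format, an assumption): the model writes free text and
# we regex-parse one tool name plus a single string argument out of it.
PAPER_OUTPUT = "Thought: I need the weather.\nAction: get_weather[Paris]"

def parse_paper_action(text: str) -> tuple[str, str]:
    match = re.search(r"Action: (\w+)\[(.*?)\]", text)
    if match is None:
        raise ValueError("could not parse an action from raw model output")
    return match.group(1), match.group(2)

def get_weather(city: str, unit: str) -> str:
    return f"(stub) weather for {city} in {unit}"  # no real lookup happens

TOOLS = {"get_weather": get_weather}

# Tool-calling style: a structured assistant message carrying several calls,
# each with multiple named arguments; nothing needs to be parsed from text.
TOOL_CALLING_OUTPUT = {
    "role": "assistant",
    "tool_calls": [
        {"id": "call_1", "name": "get_weather", "args": {"city": "Paris", "unit": "celsius"}},
        {"id": "call_2", "name": "get_weather", "args": {"city": "Tokyo", "unit": "celsius"}},
    ],
}

def execute_tool_calls(message: dict) -> list[dict]:
    # One tool message per call, linked back to its request by the call id.
    return [
        {"role": "tool", "tool_call_id": c["id"], "content": TOOLS[c["name"]](**c["args"])}
        for c in message["tool_calls"]
    ]

print(parse_paper_action(PAPER_OUTPUT))
for msg in execute_tool_calls(TOOL_CALLING_OUTPUT):
    print(msg)
```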

Custom agent architectures

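While routers and tool-calling agents (like ReAct) are common, customizing agent architectures often leads to better performance for specific tasks. LangGraph offers several features for building tailored agent systems: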

Human-in-the-loop

Human involvement can significantly enhance agent reliability, especially for sensitive tasks. This can involve:

- Approving specific actions
- Providing feedback to update the agent's state
- Offering guidance in complex decision-making processes

Human-in-the-loop patterns are crucial when full automation isn't feasible or desirable.
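A minimal approval gate in plain Python. It assumes tools can be split into sensitive and non-sensitive ones. Before a sensitive call runs, a hypothetical `approve` callback can reject it or edit its arguments. LangGraph has its own built-in way to pause a graph and wait for human input. This sketch does not use it and substitutes a simple callback instead.

```python
from typing import Callable, Optional

SENSITIVE_TOOLS = {"send_email"}

def send_email(to: str, body: str) -> str:
    return f"email sent to {to}"  # stub: no real email is sent

TOOLS = {"send_email": send_email, "lookup": lambda q: f"results for {q}"}

# An approver sees the proposed call and returns None to reject it, or the
# (possibly edited) arguments to run it with.
Approver = Callable[[str, dict], Optional[dict]]

def run_with_approval(name: str, args: dict, approve: Approver) -> str:
    if name in SENSITIVE_TOOLS:
        decision = approve(name, args)  # pause here for a human
        if decision is None:
            return f"{name} rejected by reviewer"
        args = decision  # the reviewer may have edited the arguments
    return TOOLS[name](**args)

def console_approver(name: str, args: dict) -> Optional[dict]:
    answer = input(f"Allow {name}({args})? [y/N] ")
    return args if answer.strip().lower() == "y" else None

print(run_with_approval("lookup", {"q": "docs"}, console_approver))  # not sensitive: no prompt
print(run_with_approval("send_email", {"to": "a@example.com", "body": "hi"}, lambda n, a: None))
```

Passing the approver in as a parameter lets the same gate serve a console prompt, a web UI, or an automated test.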

Parallelization

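Parallel processing is vital for efficient multi-agent systems and complex tasks. LangGraph supports parallelization, enabling:

- Concurrent processing of multiple states
- Implementation of map-reduce-like operations
- Efficient handling of independent subtasks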

For practical implementation, see our map-reduce tutorial.
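A map-reduce sketch built on Python's `concurrent.futures` thread pool, with hypothetical `summarize` (map) and `combine` (reduce) steps. In LangGraph the fan-out is expressed with its `Send` API. This sketch does not use LangGraph. It only shows the shape of the pattern: independent subtasks run concurrently, and then their results are merged.

```python
from concurrent.futures import ThreadPoolExecutor

def summarize(doc: str) -> str:
    """Map step: hypothetical per-document work (here, keep the first sentence)."""
    return doc.split(".")[0].strip()

def combine(summaries: list[str]) -> str:
    """Reduce step: merge the independent results."""
    return " | ".join(summaries)

def map_reduce(docs: list[str], max_workers: int = 4) -> str:
    # Each document is an independent subtask, so the map step can run concurrently.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        summaries = list(pool.map(summarize, docs))  # results keep input order
    return combine(summaries)

docs = [
    "Cats sleep a lot. They also purr.",
    "Rivers flow downhill. Some reach the sea.",
    "Bread needs yeast. It rises when warm.",
]
print(map_reduce(docs))
```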

Subgraphs

Subgraphs are essential for managing complex agent architectures, particularly in multi-agent systems. They allow:

- Isolated state management for individual agents
- Hierarchical organization of agent teams
- Controlled communication between agents and the main system

Subgraphs communicate with the parent graph through overlapping keys in the state schema. This enables flexible, modular agent design.
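A plain-Python sketch of communication through overlapping keys. The hypothetical `ParentState` and `ResearchState` schemas share only `messages`. The subgraph receives the shared key plus its own private `scratchpad`. When it finishes, only the shared key is copied back, so the parent never sees the subgraph's internal state.

```python
from typing import TypedDict

class ParentState(TypedDict):
    messages: list[str]
    task: str

class ResearchState(TypedDict):
    messages: list[str]      # shared with the parent (overlapping key)
    scratchpad: list[str]    # private to the subgraph

def research_subgraph(state: ResearchState) -> ResearchState:
    notes = state["scratchpad"] + ["looked up sources", "drafted summary"]
    return {"messages": state["messages"] + ["researcher: summary ready"], "scratchpad": notes}

def call_subgraph(parent: ParentState) -> ParentState:
    # Pass in only the keys the two schemas share, plus fresh private state.
    shared = {k: parent[k] for k in ResearchState.__annotations__ if k in parent}
    sub_out = research_subgraph({**shared, "scratchpad": []})
    # Copy back only overlapping keys; the scratchpad never leaks upward.
    updates = {k: v for k, v in sub_out.items() if k in ParentState.__annotations__}
    return {**parent, **updates}

result = call_subgraph({"messages": ["user: research X"], "task": "research"})
print(result)
```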

Reflection

Reflection mechanisms can significantly improve agent reliability by:

- Evaluating task completion and correctness
- Providing feedback for iterative improvement
- Enabling self-correction and learning

While often LLM-based, reflection can also use deterministic methods. For instance, in coding tasks, a compiler can provide feedback.
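A sketch of deterministic reflection for a coding task. It uses Python's built-in `compile()` as the critic instead of an LLM. The hypothetical `fake_generate` function stands in for a model that writes a flawed first draft and then revises it when given the compiler's error message.

```python
from typing import Optional

def check_code(source: str) -> Optional[str]:
    """Deterministic reflection: use Python's own compiler as the critic."""
    try:
        compile(source, "<candidate>", "exec")
    except SyntaxError as err:
        return f"SyntaxError on line {err.lineno}: {err.msg}"
    return None  # no feedback means the candidate passed

def fake_generate(task: str, feedback: Optional[str]) -> str:
    """Hypothetical stand-in for an LLM that revises code when given feedback."""
    if feedback is None:
        return "def add(a, b)\n    return a + b\n"  # first draft: missing colon
    return "def add(a, b):\n    return a + b\n"

def generate_with_reflection(task: str, max_rounds: int = 3) -> tuple[str, list[str]]:
    feedback: Optional[str] = None
    history: list[str] = []
    for _ in range(max_rounds):
        candidate = fake_generate(task, feedback)
        feedback = check_code(candidate)
        if feedback is None:
            return candidate, history
        history.append(feedback)  # feed the critique into the next attempt
    raise RuntimeError("no valid candidate after reflection rounds")

code, critiques = generate_with_reflection("write an add function")
print(critiques)
```

Swapping `check_code` for a test runner or linter keeps the same loop. Only the source of the feedback changes.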

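By leveraging these features, LangGraph enables the creation of sophisticated, task-specific agent architectures that can handle complex workflows, collaborate effectively, and continuously improve their performance.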

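- Copyright notice: Thank you for reading. This article is copyrighted by 屈定's Blog. If you repost it, please credit the source.

- Article title: Agent architectures

- Article link: https://mrdear.cn/posts/llm_agent_architectures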