Tools - Using Cloudflare AI Proxy with Claude Code and JetBrains

Preface

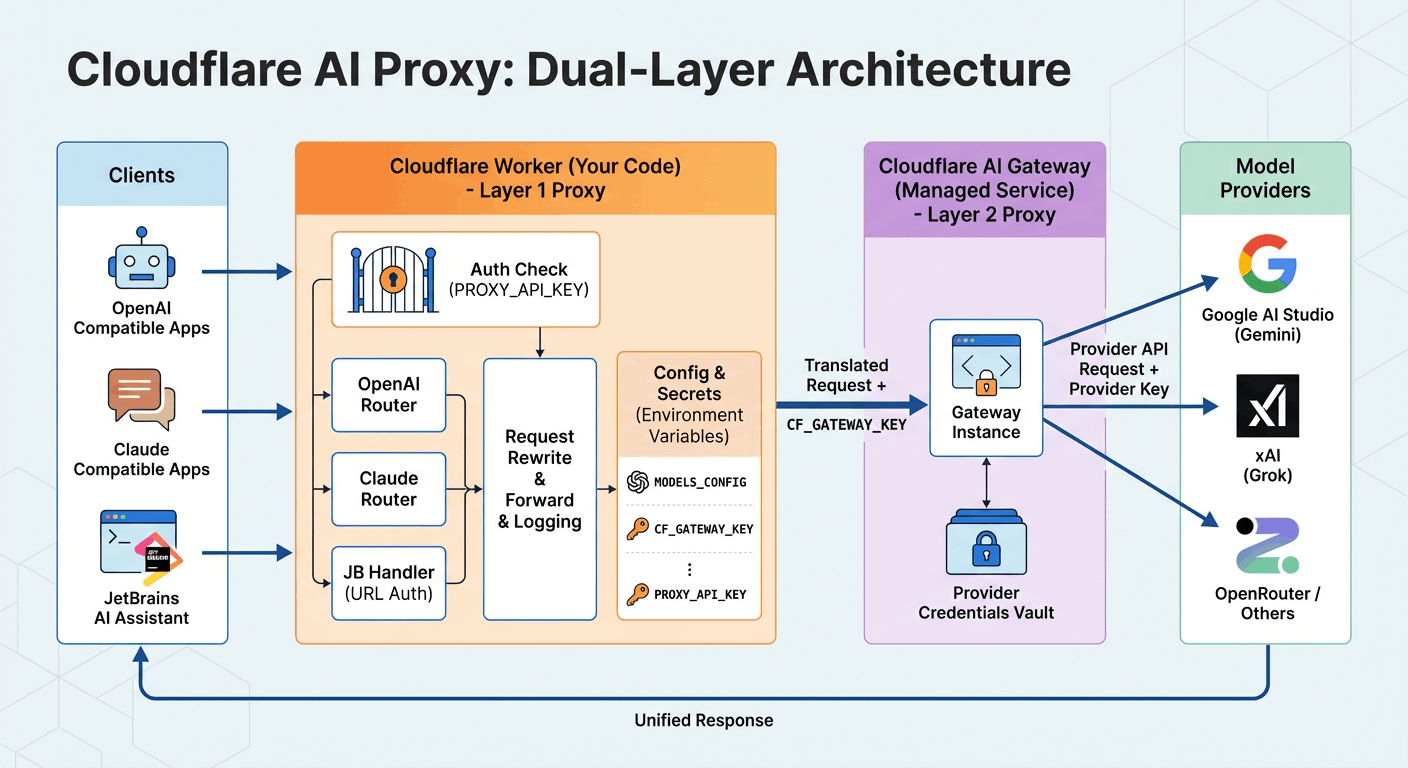

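I recently noticed that Cloudflare has launched AI Gateway. Compared with my earlier Worker proxy approach, which required writing a lot of code myself, it now takes only a thin proxy-forwarding layer to use it from all kinds of tools. Other solutions require installing software locally, so I find this cloud-based approach much more satisfying (I really dislike running assorted local services that open an HTTP server).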

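Features

- 🔄 OpenAI/Claude API compatible: `/chat/completions`, `/v1/messages`, `/models`
- 🤖 Native JetBrains support: URL-based authentication (`/jb/<key>`), no custom header required
- 📊 Request logging: built-in log recording
- Multi-model configuration: Gemini, Grok, and others, flexibly extended through AI Gateway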

Deployment

See the README for detailed usage. The repository documents it thoroughly, so I won't repeat it here.

Project repository: https://github.com/mrdear/cloudflare-ai-proxy

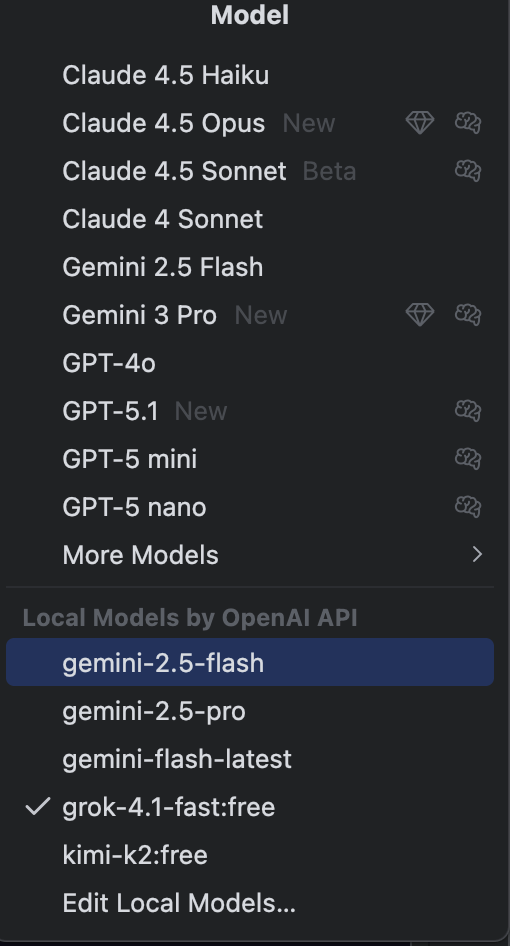

JetBrains Configuration

- Settings → Tools → AI Assistant → Models
- Click `+` to add a Provider → OpenAI Compatible
- Base URL: `https://your-worker.workers.dev/jb/YOUR_PROXY_API_KEY`
  - Replace `your-worker.workers.dev` and `YOUR_PROXY_API_KEY` with your own values
- Click `Test Connection`
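As a small illustration of the URL-based authentication above, the sketch below builds the Base URL for the JetBrains provider and derives the endpoint URLs an OpenAI-compatible client would presumably request under it. The helper names `jetbrains_base_url` and `endpoint_url` are hypothetical, and the assumption that `/models` is resolved relative to the `/jb/<key>` prefix is inferred from the feature list rather than confirmed by the repository.

```python
from urllib.parse import quote


def jetbrains_base_url(worker_host: str, proxy_api_key: str) -> str:
    """Build the Base URL for the OpenAI Compatible provider.

    The key travels in the path (/jb/<key>), so no Authorization header is set.
    """
    # Percent-encode the key so characters such as "/" cannot alter the path.
    return f"https://{worker_host}/jb/{quote(proxy_api_key, safe='')}"


def endpoint_url(base_url: str, endpoint: str) -> str:
    """Join an endpoint such as "/models" onto the base URL (assumed layout)."""
    return f"{base_url.rstrip('/')}/{endpoint.lstrip('/')}"


base = jetbrains_base_url("your-worker.workers.dev", "YOUR_PROXY_API_KEY")
print(base)
print(endpoint_url(base, "/models"))
```

Because the key is part of the URL, it is worth treating the full Base URL as a secret, for example by keeping it out of screenshots and shared IDE settings.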

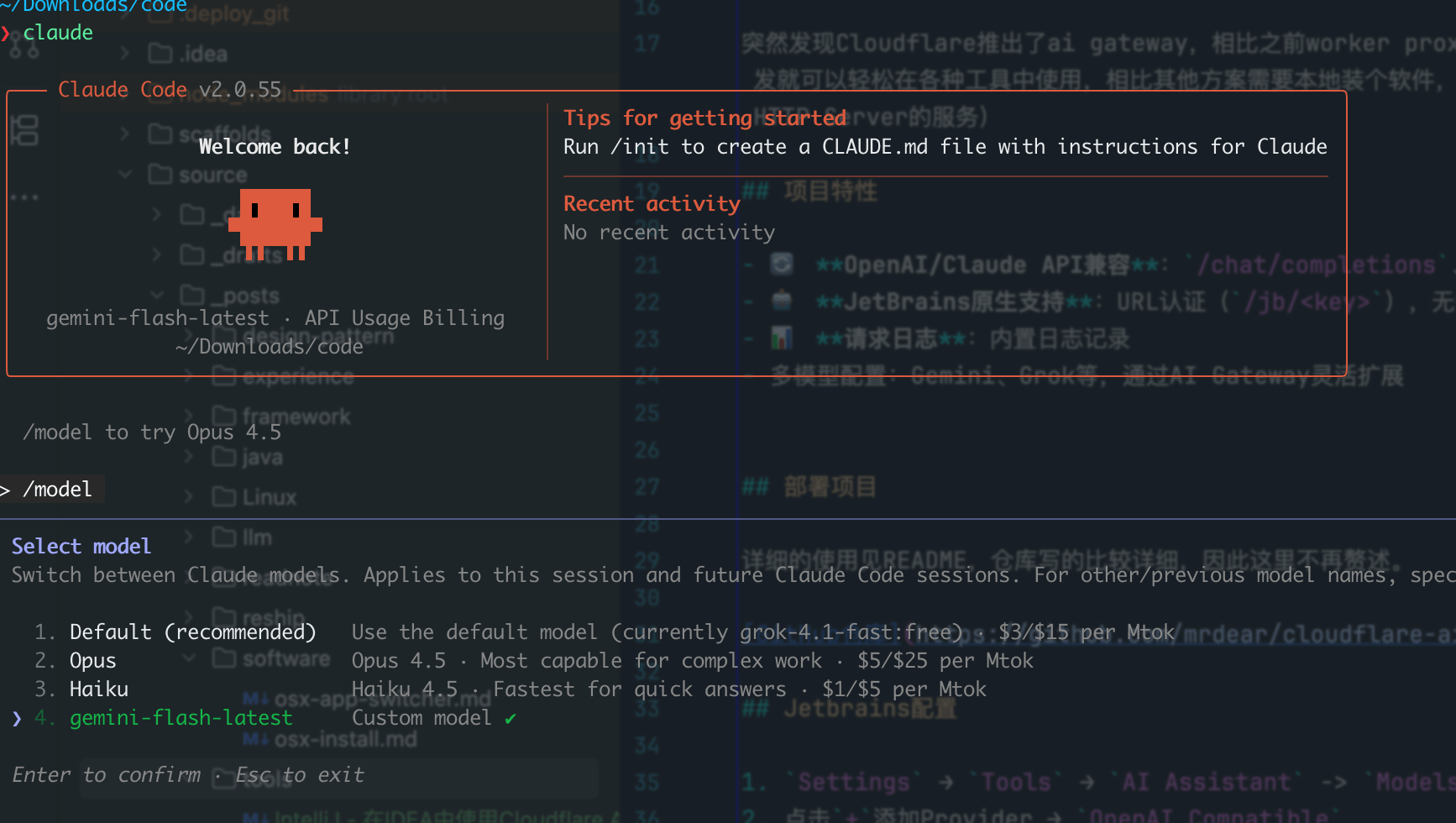

Claude Code Configuration

- Open `~/.claude/settings.json`
- Write the following configuration

```json
{
  "env": {
    "ANTHROPIC_AUTH_TOKEN": "YOUR_PROXY_API_KEY",
    "ANTHROPIC_BASE_URL": "https://your-worker.workers.dev",
    "ANTHROPIC_DEFAULT_HAIKU_MODEL": "your model like gemini-flash-latest",
    "ANTHROPIC_DEFAULT_OPUS_MODEL": "your model like gemini-2.5-pro",
    "ANTHROPIC_DEFAULT_SONNET_MODEL": "your model like grok-4.1-fast:free",
    "ANTHROPIC_MODEL": "your model like gemini-flash-latest"
  }
}
```

- Restart claude
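If the settings file already contains other options, editing it by hand risks overwriting them. The following is a minimal sketch, not part of the project, that merges the `env` entries from the configuration above into an existing JSON file while leaving every other key untouched. The function name `merge_env` is hypothetical, and the model names are only the placeholder examples from the configuration.

```python
import json
from pathlib import Path

# Values mirror the configuration above; replace the placeholders before use.
PROXY_ENV = {
    "ANTHROPIC_AUTH_TOKEN": "YOUR_PROXY_API_KEY",
    "ANTHROPIC_BASE_URL": "https://your-worker.workers.dev",
    "ANTHROPIC_DEFAULT_HAIKU_MODEL": "gemini-flash-latest",
    "ANTHROPIC_DEFAULT_OPUS_MODEL": "gemini-2.5-pro",
    "ANTHROPIC_DEFAULT_SONNET_MODEL": "grok-4.1-fast:free",
    "ANTHROPIC_MODEL": "gemini-flash-latest",
}


def merge_env(settings_path: Path, env: dict[str, str]) -> dict:
    """Merge env into the "env" object of a JSON settings file.

    Existing top-level keys and unrelated env entries are preserved.
    """
    settings = {}
    if settings_path.exists():
        text = settings_path.read_text(encoding="utf-8")
        # Treat an empty file the same as a missing one.
        settings = json.loads(text) if text.strip() else {}
    settings.setdefault("env", {}).update(env)
    settings_path.parent.mkdir(parents=True, exist_ok=True)
    settings_path.write_text(json.dumps(settings, indent=2) + "\n", encoding="utf-8")
    return settings
```

Calling `merge_env(Path.home() / ".claude" / "settings.json", PROXY_ENV)` would apply it to the real file; making a backup copy first is a sensible precaution.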

API Usage Examples

List models:

```bash
curl -H "Authorization: Bearer YOUR_PROXY_API_KEY" https://your-worker.workers.dev/models
```

Chat (OpenAI style):

```bash
curl -H "Authorization: Bearer YOUR_PROXY_API_KEY" \
```
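The chat command above is cut off after the header line, so its exact body is not shown here. As a hedged sketch only, the snippet below builds an OpenAI-style chat completion request against the `/chat/completions` endpoint named in the feature list. The request body (a `model` plus a `messages` array) follows the general OpenAI chat format and is an assumption about what the proxy accepts; `build_chat_request` is a hypothetical helper, and `gemini-flash-latest` is just the placeholder model from the configuration example.

```python
import json
import urllib.request

BASE_URL = "https://your-worker.workers.dev"  # placeholder Worker address
API_KEY = "YOUR_PROXY_API_KEY"                # placeholder proxy key


def build_chat_request(prompt: str, model: str = "gemini-flash-latest") -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,  # assumed to be a model configured in the proxy
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {API_KEY}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_chat_request("Hello")
print(request.get_method(), request.full_url)
```

Sending it would be a matter of passing the request to `urllib.request.urlopen` and decoding the JSON response; that step is left out so the sketch runs without a deployed Worker.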

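- Copyright notice: Thank you for reading. This article is copyrighted by 屈定's Blog. If you repost it, please credit the source.

- Article title: Tools - Using Cloudflare AI Proxy with Claude Code and JetBrains

- Article link: https://mrdear.cn/posts/work-tools-cloudflare-ai-proxy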